Cull Groups Online

Facebook was once a place to catch up with friends, join groups, or see cute baby animals. Today, nearly all the “fun content” pages on every social media platform are AI-generated and push “slop”. Building followers and rank with AI content, then selling the account to malicious actors, is an industry with real consequences, from fraud to election manipulation. Fight back by focusing your online attention on real people who are trying hard.

Why we do it:

Debate rages on whether generative AI is our salvation or our destruction. Few land in the middle, where the truth usually resides. What is clearly bad is this: it is being heavily leveraged to manipulate social media. If Russia’s efforts in 2016 put a thumb on our electoral scale, we now face a firehose.

To understand how online actors manipulate public perception in elections, we strongly recommend Maria Ressa’s How to Stand Up to a Dictator. In short, bad actors can spread their claims much more quickly than those bound to the truth. A recent change to X revealed high-profile right-wing accounts to be based in other nations. AI didn’t invent this problem, but it accelerates it. In the past year AI “slop” has exploded. These are the stories you share with “probably not real, but I kinda want it to be!” or “I had no idea that’s what a baby peacock looked like!” (because it doesn’t).

These posts feel harmless, but they fuel rank for accounts that are then sold on the dark web to fraudsters and political operatives alike. We fight back by siloing that content, isolating it in an AI fantasy world of its own. If we do that, it’ll proselytize to itself next election cycle.

How to do it:

The simplest approach is to leave or unfollow any group where you spot AI stories. Because AI is getting better, you may miss some, so if you notice a couple of posts that are clearly fake and the group takes no action, leave. You’re probably missing more. If a friend shares a post that is clearly AI, block the account that made the post. If you have a friend who insists on sharing AI content, consider silencing their feed.

For a more aggressive approach, go through your groups checking these elements:

- age of group (the more recent the riskier)

- are there rules or questions new members must answer?

- is there an AI policy?

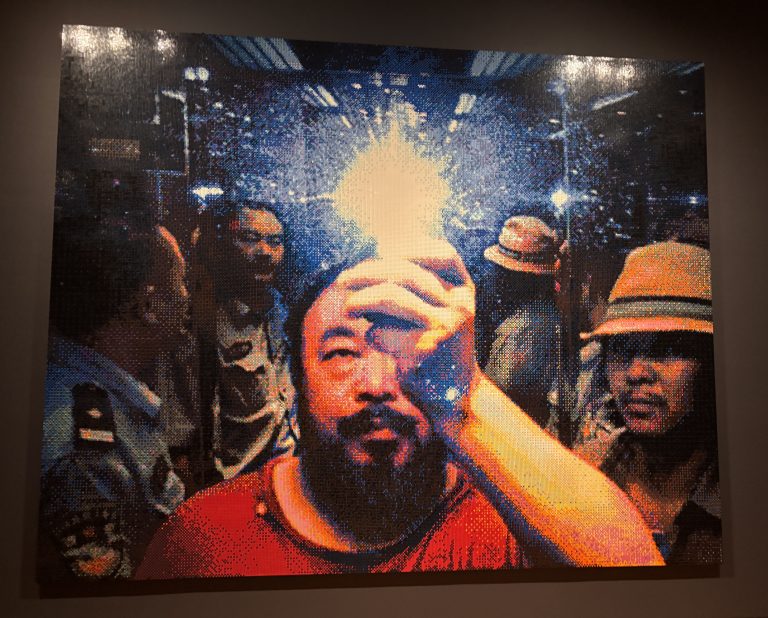

- are there named/interactive admins you can identify? (This is one reason Shasta put her real face and name on this site. Trust requires trust.)

- is the content mostly real or mostly fake?

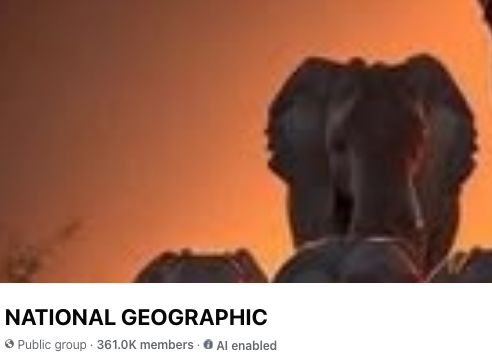

- is the group cashing in on a name brand that isn’t theirs? Look for signs the group is a “fan group” or otherwise not official. (See the example in the image.)

- if you skim down their posts, do some seem out of place? For example, the page we took the image from has a post about a Nigerian man claiming to be Elon Musk’s “eldest son”.

For the most restrictive approach, refuse to follow or join any group you can’t positively trace to real humans. Be particularly suspicious of groups that are recent and grew quickly. It takes time to build a real following, and having ten thousand of your closest bot friends boost you definitely speeds things up.

By routinely suppressing the distribution of AI content, we devalue it and discourage this tactic.